Motion capture is a distinct form of animation that has come a long way since its origins in 1915, and it still has plenty of room for improvement and progression.

Motion capture animation uses a set of cameras to record an actor’s movement, often with the help of a mocap suit (a suit fitted with markers for the cameras to track).

The recording is then applied to a 2D or 3D character, which the computer animates to reproduce the recorded movement.
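
Applying a recording to a character is often called retargeting. The following is a minimal sketch that assumes the marker data has already been solved into per-joint rotations; it shows only the simplest form of retargeting, copying each captured joint’s rotation onto the matching joint of the character’s rig. The joint names and the `JOINT_MAP` table are hypothetical, and real tools also have to compensate for differences in bone lengths and rest poses, which this sketch ignores.

```python
from typing import Dict, List, Tuple

# One captured frame: joint name -> (x, y, z) rotation in degrees.
Rotation = Tuple[float, float, float]
Frame = Dict[str, Rotation]

# Hypothetical mapping from capture-skeleton joint names to character-rig
# joint names; real names depend on the capture and animation software.
JOINT_MAP: Dict[str, str] = {
    "Hips": "pelvis",
    "Spine": "spine_01",
    "LeftArm": "upperarm_l",
    "RightArm": "upperarm_r",
}


def retarget(frames: List[Frame], joint_map: Dict[str, str]) -> List[Frame]:
    """Copy each captured joint rotation onto the matching rig joint.

    Captured joints with no entry in joint_map are skipped. Bone lengths and
    rest poses are assumed to match, which real retargeting cannot assume.
    """
    return [
        {joint_map[joint]: rot for joint, rot in frame.items() if joint in joint_map}
        for frame in frames
    ]


captured = [{"Hips": (0.0, 10.0, 0.0), "LeftArm": (45.0, 0.0, 0.0), "Head": (0.0, 0.0, 5.0)}]
print(retarget(captured, JOINT_MAP))
# [{'pelvis': (0.0, 10.0, 0.0), 'upperarm_l': (45.0, 0.0, 0.0)}]
```

The captured `Head` joint is dropped because the hypothetical map has no entry for it; a fuller mapping would simply list more joints.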

Mocap can easily be compared to the older technique of rotoscoping, as seen in Disney’s Snow White (1937), in which animators traced over live-action footage to give the characters smooth, human-like movement.

Compared with modern examples of motion capture such as War for the Planet of the Apes (2017), in which the technique produces animation that is almost indistinguishable from reality, it is evident that motion capture has come a long way. But what does that mean for other forms of animation?

While motion capture is an extremely efficient way to imitate realistic movement, it is common to use manual animation techniques to polish the result. Furthermore, if realism isn’t the desired effect, manual animation may be the better choice overall. Manual animation gives the media it is applied to a unique aesthetic, making it appealing and eye-catching to a wide range of audiences. A good example of a modern game that became popular thanks to its creative use of manual animation is Undertale (2015), which gained a very large fan base because of its distinctive style.

There are many different styles of manual animation, such as clay animation, puppet animation and stop-motion, and there are just as many CGI-based animated movies that give themselves a distinct, unique and memorable style. These styles are mostly used in children’s movies to create exaggerated animation, as in Toy Story (1995) or Incredibles 2 (2018).

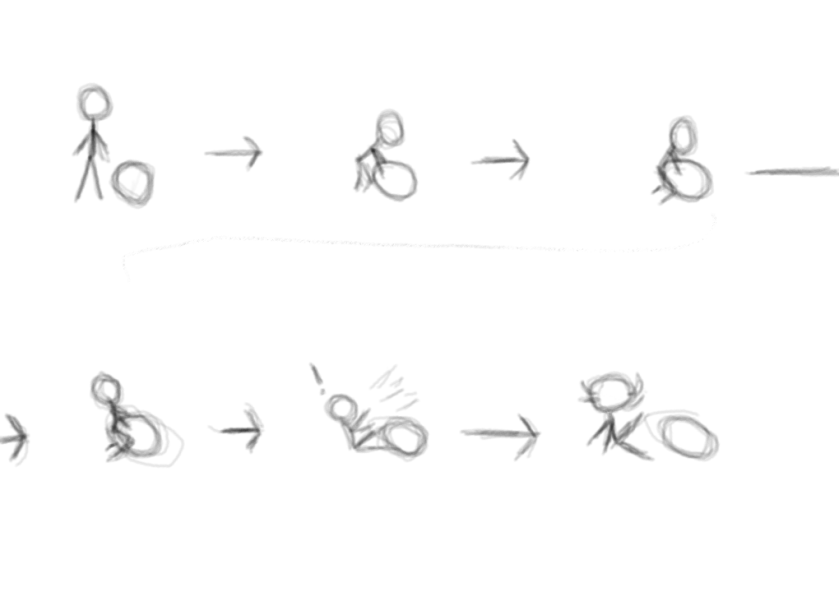

Stop-motion is a form of animation in which objects are moved slightly between frames, creating an illusion of independent movement when the frames are played in quick succession. It is a widely known and accessible method, used in even the simplest animations. While basic stop-motion is used less directly today, many modern animation styles are built on the same premise, such as the clay animation of Chicken Run (2000) or the puppet animation of Coraline (2009).

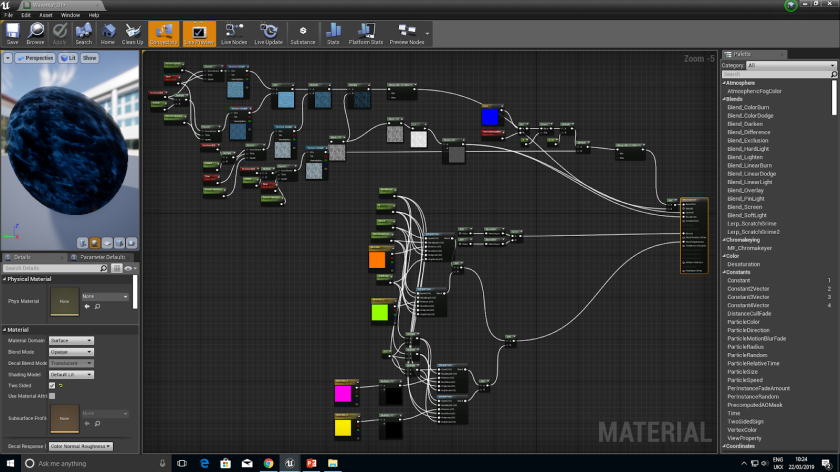

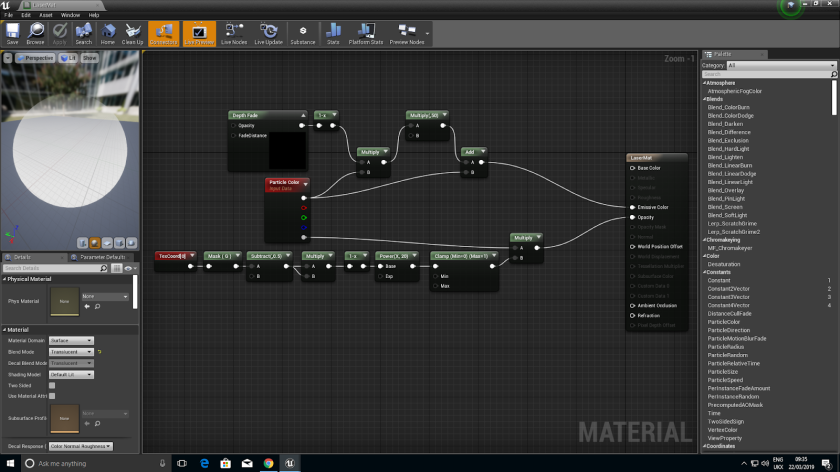

Another form of manual animation is CGI, or computer animation.

Computer animation usually uses one of two methods: stop-motion or point-to-point animation. Stop-motion CGI works the same way as the stop-motion described above, with the animator posing every individual frame. Point-to-point animation follows a similar premise, but only certain frames (keyframes) are created by the animator and the computer fills in the frames between them. Both methods are used for 2D and 3D CGI, but 3D animation also introduces the rig, a skeleton-like structure inside the mesh that the computer uses to determine how each part of the mesh moves during an animation.
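
The way the computer fills the gaps in point-to-point animation can be sketched in a few lines. This is a minimal sketch assuming plain linear interpolation of a single value, such as a joint’s rotation in degrees; real animation software usually blends keyframes with easing curves or splines rather than straight lines, and the name `interpolate` is only illustrative.

```python
from bisect import bisect_right
from typing import List, Tuple

# A keyframe: (frame number, value), e.g. an arm's rotation in degrees.
Keyframe = Tuple[int, float]


def interpolate(keys: List[Keyframe], frame: int) -> float:
    """Return the value at `frame` by linearly blending the surrounding keyframes.

    `keys` must be sorted by strictly increasing frame number. Frames before
    the first key or after the last key hold that key's value.
    """
    frames = [f for f, _ in keys]
    if frame <= frames[0]:
        return keys[0][1]
    if frame >= frames[-1]:
        return keys[-1][1]
    i = bisect_right(frames, frame)  # index of the first key after `frame`
    (f0, v0), (f1, v1) = keys[i - 1], keys[i]
    t = (frame - f0) / (f1 - f0)  # 0.0 at the earlier key, 1.0 at the later key
    return v0 + (v1 - v0) * t


# The animator sets three keyframes; the computer fills in every other frame.
keys = [(0, 0.0), (10, 90.0), (20, 45.0)]
in_betweens = [interpolate(keys, f) for f in range(0, 21, 5)]
print(in_betweens)  # [0.0, 45.0, 90.0, 67.5, 45.0]
```

Only frames 0, 10 and 20 are posed by hand; frames 5 and 15 are generated. Straight-line blending like this tends to look mechanical, which is why animators usually adjust the in-between curves by hand.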

These methods allow animators to create motion without the limitations of realism or physics. This benefit is often seen in children’s and family movies, which rely on techniques such as squash and stretch and other exaggerated movement that motion capture cannot record. Freedom from realism and physics also lets these methods be used in fantasy and science-fiction films and series to animate non-humanoid creatures, such as the dragons in Game of Thrones.

While these forms of animation offer fewer limitations, they also leave more room for mistakes and errors. Stop-motion is time-consuming, as the object’s position must be altered for every individual frame of the motion. Manual CGI is also time-consuming, and depending on the method used, the computer might deform the mesh during point-to-point animation.

Motion capture (sometimes known as performance capture) outshines manual animation in a variety of ways, allowing animators to create smooth, realistic movement for their characters. While motion capture is used in both movies and games, I believe games present its capabilities, benefits and flaws much more clearly than movies because of the different types of rendering: games render their animation in real time, whereas movies are pre-rendered.

Mocap can be seen as a more developed version of rotoscoping, which was used to create older animated Disney films such as Cinderella (1950) and Alice in Wonderland (1951), and was even used for anime films such as The Girl Who Leapt Through Time (2006). Rotoscoping uses a similar method of recording an actor’s movements and copying them onto the character. However, rotoscoping requires someone to trace each frame of the recording by hand, whereas motion capture is animated by the computer.

While mocap was attempted in earlier games, the first game to use true motion capture was the early 3D arcade game Virtua Fighter 2 (1994), which was heavily praised for its graphics. Motion capture has clearly improved since then, as seen in the 2019 remake of Resident Evil 2, which uses an eye-catching, realistic style of animation; comparing it with Virtua Fighter 2 shows how far motion capture in games has progressed.

Mocap can easily capture secondary actions and recreate complex movements realistically. Because the animation is mostly automated, apart from animators polishing it with traditional methods, mocap is much more efficient in both time and cost.

As Engadget (2014) explains:

“Nothing to do with 3D animation is cheap, motion capture included. But, like anything digital, prices have come way down as of late. On the low end of the scale, you or I can do markerless motion capture at home with a Kinect and iPi Motion Capture software for $295. On the other end of the scale, EA’s new Capture Lab […] covers 18,000 square feet, and uses the latest Vicon Blade mocap software and 132 Vicon cameras. We don’t know exactly how much that cost them, but a two-camera Vicon system with one software license is $12,500. (Bear in mind that you’ll also need software like MotionBuilder to map the capture data to a character, which runs about $4,200 per seat.) Despite those prices, doing motion capture reportedly costs anywhere from a quarter to half as much as keyframe animation, and results in more lifelike animation.”

Motion capture can be used in a variety of situations. It can be used underwater, although capturing underwater is much harder because the reflectiveness and distortion of the water make it difficult for the cameras to trace the markers’ locations. It can also be used for facial animation (in which case it is often called performance capture) to capture the more complicated motions of the human face, recording a wider range of emotion and making the character feel more organic and real.

Originally, motion capture was used solely to animate humans, while animals were animated with traditional, manual methods such as CGI. Over time, however, motion capture became usable for animals as well, allowing smooth, realistic animal animation without the time-consuming process of manual animation.

An example of motion capture taking over from CGI animation is the Harry Potter series.

Originally, characters such as Dobby or Kreacher would be represented on set by sticks or statues (if they were represented at all) and added to the scene with CGI later, leaving the actors to react to elves that were not there. Over time, however, the filmmakers began animating the elves with mocap, making the job easier not only for the animators but for the actors as well.

However, this didn’t remove CGI entirely; it was still used to create characters such as the troll, Grawp, the Thestrals and more.

Motion capture is not only used for animation; it has also begun to be implemented as a gameplay feature through devices such as PlayStation Move and Xbox Kinect, in a method called positional tracking. While these devices do not work exactly like mocap, they follow the same premise of using a camera to capture and record the motion of a human actor, with PlayStation Move even using an LED-lit sphere as a tracker, similar to the markers on a mocap suit. In some games the tracked motion is shown on an in-game character, using the player’s movements to animate them, although these animations are often broken and buggy.

A good example of motion capture used as a gameplay element is the popular Just Dance series, which not only uses motion capture for its animation style but also tracks the player’s movements as an essential part of its gameplay.

Positional tracking is also essential to AR and VR, which use the same idea of a camera tracking the motion and position of a device such as a VR headset. This head tracking is crucial because it lets the device determine the position of the user’s head, which is then used to adjust the player’s view to match where they are looking.
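
As a rough illustration of how tracked head data can drive the view, this sketch places a virtual camera at the tracked head position and points it where the head is facing. It assumes the tracker reports a position plus yaw and pitch angles, and uses a hypothetical coordinate convention (y up, yaw 0 looking along +z); real headsets typically report orientation as a quaternion and also track roll, which this sketch ignores.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def view_direction(yaw_deg: float, pitch_deg: float) -> Vec3:
    """Unit vector the user is looking along.

    Assumed convention (hypothetical): y is up, yaw 0 / pitch 0 looks along
    +z, positive yaw turns toward +x, positive pitch looks up.
    """
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    return (
        math.cos(pitch) * math.sin(yaw),
        math.sin(pitch),
        math.cos(pitch) * math.cos(yaw),
    )


def update_camera(head_pos: Vec3, yaw_deg: float, pitch_deg: float) -> Tuple[Vec3, Vec3]:
    """Place the virtual camera at the tracked head, facing where the head faces.

    Returns (camera position, a point one unit ahead for the camera to look at).
    """
    d = view_direction(yaw_deg, pitch_deg)
    target = (head_pos[0] + d[0], head_pos[1] + d[1], head_pos[2] + d[2])
    return head_pos, target


# A user standing with their head 1.7 units up, turned 90 degrees to the right.
camera_pos, look_at = update_camera((0.0, 1.7, 0.0), 90.0, 0.0)
```

In a real engine this update would run every frame with fresh tracking data, so the rendered view follows the user’s head with as little delay as possible.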

A great example of facial performance capture in games is Injustice 2 (2017), which was widely praised for its realistic facial animation, most visible in Harley Quinn because of her more exaggerated facial expressions.

While facial performance capture is highly impressive and realistic, it can sometimes create abnormal facial expressions that trigger the uncanny valley effect, which was prominent in Until Dawn (2015).

As well as risking the uncanny valley effect in facial performance capture, mocap also restricts animation to being realistic. Physics-defying actions such as double jumping cannot be captured to a realistic standard. Furthermore, unusual character models and meshes can limit how well they can be animated with motion capture; for example, a character with exaggeratedly large hands, or one with an otherwise inhuman design, may not be animated convincingly with motion capture.

For this reason, many developers choose to base a character’s face on its actor to prevent errors in the facial animation. For example, in the upcoming Mortal Kombat 11, the revealed character Sonya Blade is voiced by and modelled after the popular WWE wrestler Ronda Rousey. This not only gives the developers a direct reference for the character’s facial structure and movements but also provides a convenient way to build celebrity endorsement into their games, encouraging players to buy the game because a celebrity appears in it.

This combination of easier character animation and built-in celebrity endorsement is most often seen in sports games such as the WWE or UFC series, which feature characters based on real celebrities involved in the sport and even use them as the cover image for their games. While this form of advertising isn’t created by motion capture, the style of animation makes it much easier to achieve, as it lets developers make their characters look and move just like the real person would.

Overall, I believe that motion capture animation has grown to a point where it can be considered better than manual animation in many respects, and it is even used beyond animation as an important utility for consoles in the form of positional tracking, which borrows the same method of a camera tracking an external actor through a marker. However, it may not entirely replace manual animation because of its limitations. Animators often use a mix of the two methods, using manual techniques to polish motion capture. Both methods have their pros and cons, and most consumers arguably don’t have a preference about which animation style is used in the movies or video games they watch or play.

Bibliography:

IGN. (2014). A brief history of motion-capture in the movies. [online] Available at: https://uk.ign.com/articles/2014/07/11/a-brief-history-of-motion-capture-in-the-movies [Accessed 16 Feb. 2019].

En.wikipedia.org. (2019). Motion capture. [online] Available at: https://en.wikipedia.org/wiki/Motion_capture#Facial_motion_capture [Accessed 1 Mar. 2019].

En.wikipedia.org. (2019). Animation. [online] Available at: https://en.wikipedia.org/wiki/Animation#Traditional_animation [Accessed 1 Mar. 2019].

Engadget. (2014). What you need to know about 3D motion capture. [online] Available at: https://www.engadget.com/2014/07/14/motion-capture-explainer/ [Accessed 10 Mar. 2019].

En.wikipedia.org. (2019). Virtua Fighter 2. [online] Available at: https://en.wikipedia.org/wiki/Virtua_Fighter_2 [Accessed 12 Mar. 2019].

En.wikipedia.org. (2019). Positional tracking. [online] Available at: https://en.wikipedia.org/wiki/Positional_tracking [Accessed 14 Mar. 2019].